ONNX

The TensorONNX plugin adds ONNX (Open Neural Network Exchange) model support to the Tellusim SDK. It loads ONNX models, manages their tensors, and executes them through the TensorGraph runtime. ONNX computation graphs are dispatched on GPU devices, with optional timing queries and reduced-precision formats.
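The overview does not say which reduced-precision format the plugin uses. Assuming it is IEEE 754 half precision (binary16), a common GPU choice, the following standalone sketch shows what storing a 32-bit value in 16 bits involves. `float_to_half` and `half_to_float` are hypothetical helpers written for illustration. They are not part of the TensorONNX API.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>
#include <cstring>
#include <limits>

// Convert a 32-bit float to IEEE 754 binary16 bits, rounding to nearest even.
// Simplification: results below the smallest normal half (2^-14) are flushed
// to signed zero instead of being encoded as half subnormals.
static std::uint16_t float_to_half(float value) {
    std::uint32_t bits;
    std::memcpy(&bits, &value, sizeof(bits));
    const std::uint32_t sign = (bits >> 16) & 0x8000u;
    const std::uint32_t exponent = (bits >> 23) & 0xffu;
    const std::uint32_t mantissa = bits & 0x7fffffu;
    if (exponent == 0xffu) {
        // infinity stays infinity; NaN keeps a quiet-NaN payload bit
        return static_cast<std::uint16_t>(sign | 0x7c00u | (mantissa ? 0x200u : 0u));
    }
    const int half_exponent = static_cast<int>(exponent) - 127 + 15;
    if (half_exponent >= 0x1f) return static_cast<std::uint16_t>(sign | 0x7c00u); // overflow to infinity
    if (half_exponent <= 0) return static_cast<std::uint16_t>(sign); // flush to zero
    // keep the top 10 of 23 mantissa bits and round on the 13 dropped bits
    std::uint32_t result = (static_cast<std::uint32_t>(half_exponent) << 10) | (mantissa >> 13);
    const std::uint32_t dropped = mantissa & 0x1fffu;
    if (dropped > 0x1000u || (dropped == 0x1000u && (result & 1u))) {
        result += 1; // a carry out of the mantissa correctly bumps the exponent
    }
    return static_cast<std::uint16_t>(sign | result);
}

// Expand binary16 bits back to a 32-bit float (exact, since every half fits in a float).
static float half_to_float(std::uint16_t half) {
    const float sign = (half & 0x8000u) ? -1.0f : 1.0f;
    const int exponent = (half >> 10) & 0x1f;
    const int mantissa = half & 0x3ff;
    if (exponent == 0x1f) {
        return mantissa ? std::numeric_limits<float>::quiet_NaN() : sign * std::numeric_limits<float>::infinity();
    }
    if (exponent == 0) return sign * std::ldexp(static_cast<float>(mantissa), -24); // zero or subnormal
    return sign * std::ldexp(static_cast<float>(mantissa + 1024), exponent - 25);
}
```

For example, `0.1f` becomes the bits `0x2e66`, which read back as `0.0999755859375`. That rounding error is the cost of halving the memory and bandwidth per value.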


The `plugins/parallel/onnx/extern/lib` directory contains prebuilt Nanopb libraries for parsing ONNX protocol buffers.
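Nanopb decodes the protocol buffer wire format in which `.onnx` files are stored. The sketch below is not Nanopb's API. It is a hypothetical, standalone illustration of the two primitives every protobuf decoder starts with: base-128 varints and field keys (a field number plus a wire type).

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <optional>

// Decode one base-128 varint starting at data[pos] and advance pos past it.
// Each byte carries 7 payload bits, least significant group first; a set high
// bit means another byte follows. Returns std::nullopt for truncated input or
// a varint longer than 10 bytes.
static std::optional<std::uint64_t> read_varint(const std::uint8_t *data, std::size_t size, std::size_t &pos) {
    assert(data != nullptr || size == 0);
    std::uint64_t value = 0;
    for (unsigned shift = 0; shift < 64 && pos < size; shift += 7) {
        const std::uint8_t byte = data[pos++];
        value |= static_cast<std::uint64_t>(byte & 0x7fu) << shift;
        if ((byte & 0x80u) == 0) return value;
    }
    return std::nullopt;
}

// A field key is a varint holding (field_number << 3) | wire_type.
struct FieldKey {
    std::uint32_t number;    // field number from the .proto schema
    std::uint32_t wire_type; // 0 = varint, 1 = 64-bit, 2 = length-delimited, 5 = 32-bit
};

static std::optional<FieldKey> read_key(const std::uint8_t *data, std::size_t size, std::size_t &pos) {
    const std::optional<std::uint64_t> key = read_varint(data, size, pos);
    if (!key) return std::nullopt;
    return FieldKey{static_cast<std::uint32_t>(*key >> 3), static_cast<std::uint32_t>(*key & 0x7u)};
}
```

In ONNX's `ModelProto`, field 1 is `ir_version`. The bytes `0x08 0x07` therefore decode as key (field 1, wire type 0) followed by the varint value 7.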

```cpp
#include <parallel/onnx/include/TellusimONNX.h>
```

Example

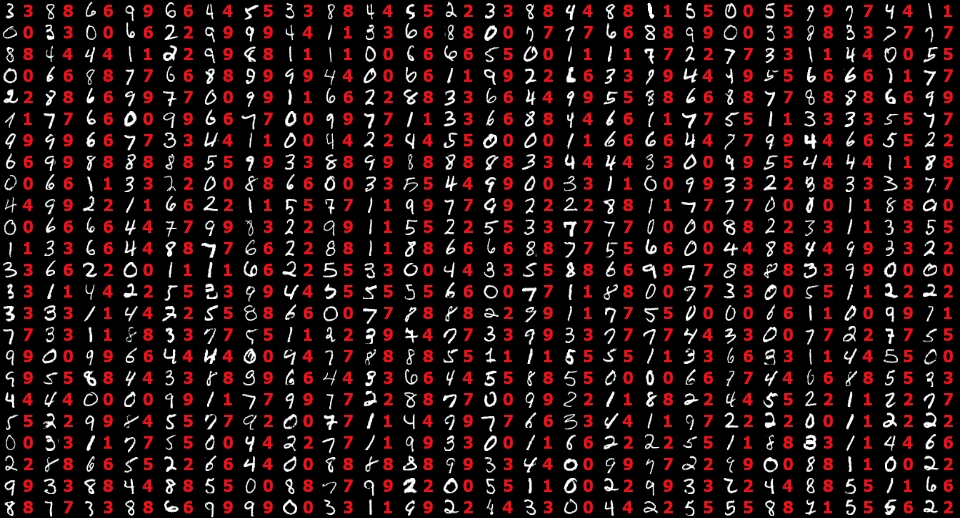

The following example performs real-time MNIST digit recognition:

```cpp
// create tensor graph
TensorGraph tensor_graph;
if(!tensor_graph.create(device, TensorGraph::FlagsAll, TensorGraph::MasksAll, &async)) return false;

// load model
TensorONNX tensor_onnx;
if(!tensor_onnx.load(device, DATA_PATH "model.onnx", TensorONNX::FlagQuery)) return false;

// copy texture to input tensor
tensor_graph.dispatch(compute, input_tensor, texture);

// dispatch model
Tensor output_tensor(&output_buffer);
if(!tensor_onnx.dispatch(tensor_graph, compute, output_tensor, input_tensor, tensor_buffer)) return false;
```
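The example leaves the pixel format of `texture` and the contents of `output_buffer` implicit. Assuming a typical MNIST classifier (a 1x1x28x28 float input scaled to [0, 1] and 10 output scores, one per digit), the CPU-side equivalent of the copy step and of reading the result can be sketched as follows. `normalize_pixels` and `argmax_digit` are hypothetical helpers, not Tellusim functions.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical MNIST layout: 28x28 single-channel image, row-major.
// With N = C = 1, an NCHW float tensor has the same memory order as the image.
constexpr std::size_t mnist_side = 28;
constexpr std::size_t mnist_classes = 10;

// Scale 8-bit pixel intensities to floats in [0, 1].
static std::vector<float> normalize_pixels(const std::vector<std::uint8_t> &pixels) {
    assert(pixels.size() == mnist_side * mnist_side);
    std::vector<float> tensor(pixels.size());
    for (std::size_t i = 0; i < pixels.size(); i++) {
        tensor[i] = static_cast<float>(pixels[i]) / 255.0f;
    }
    return tensor;
}

// Return the index of the highest score, which is the recognized digit.
static std::size_t argmax_digit(const std::array<float, mnist_classes> &scores) {
    std::size_t best = 0;
    for (std::size_t i = 1; i < scores.size(); i++) {
        if (scores[i] > scores[best]) best = i;
    }
    return best;
}
```

Taking the argmax of the raw scores yields the same digit as taking it after a softmax, because softmax preserves ordering, so no normalization is needed just to pick the answer.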